Kubernetes


2. Offline Binary Deployment of k8s

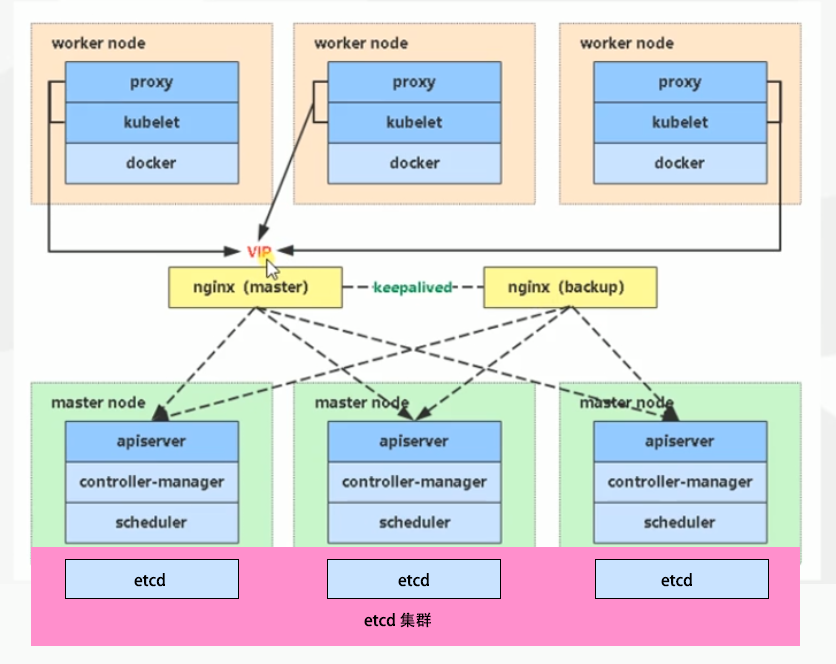

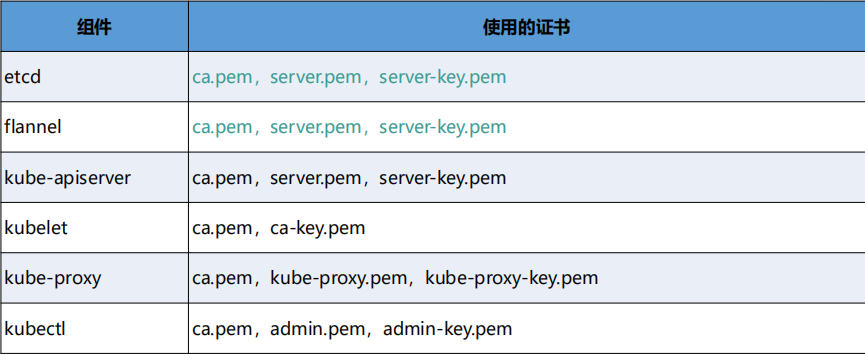

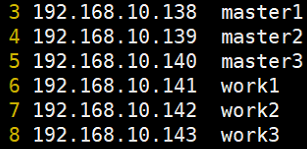

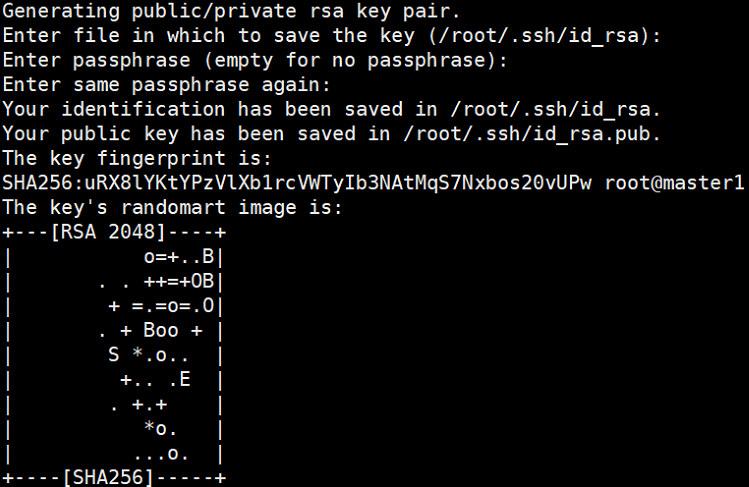

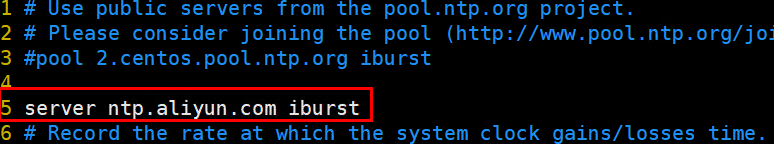

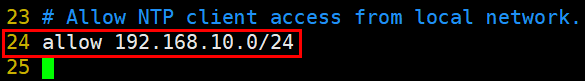

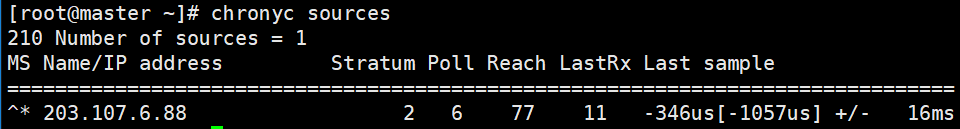

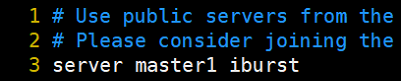

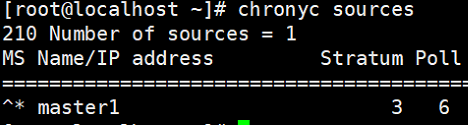

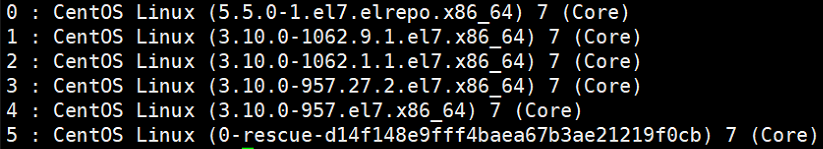

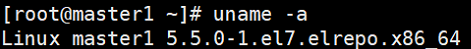

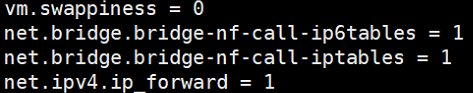

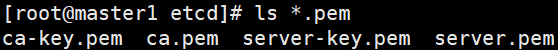

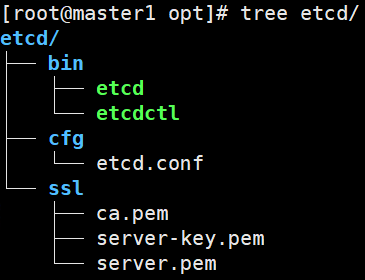

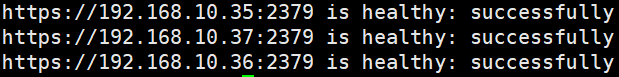

# I. Server Planning

1. Cluster architecture
2. Server roles

| Hostname | IP | Spec | Components | Role |
| --- | --- | --- | --- | --- |
| master1 | 192.168.10.11 | 4C4G | kube-apiserver kube-controller-manager kube-scheduler etcd flannel | Control node 1 |
| master2 | 192.168.10.12 | 4C4G | kube-apiserver kube-controller-manager kube-scheduler etcd flannel | Control node 2 |
| master3 | 192.168.10.140 | 4C4G | kube-apiserver kube-controller-manager kube-scheduler etcd flannel | Control node 3 |
| work1 | 192.168.10.141 | 4C4G | kubelet kube-proxy docker | Worker node 1 |
| work2 | 192.168.10.142 | 4C4G | kubelet kube-proxy docker | Worker node 2 |
| work3 | 192.168.10.143 | 4C4G | kubelet kube-proxy docker | Worker node 3 |
| Load Balancer (Master) | | 2C2G | Nginx keepalived | Load balancing / HA |
| Load Balancer (Backup) | | 2C2G | Nginx keepalived | Load balancing / HA |
| VIP | 192.168.10.10 | / | | Virtual IP that floats between the two load balancers |

3. Certificates used by the components

# II. System Initialization (all master and worker nodes)

1. Set the hostname: `# hostnamectl set-hostname master1`
2. Edit the hosts file: `# vim /etc/hosts`
3. Make sure the MAC address and product UUID are unique on every node:
   - `# cat /sys/class/net/ens160/address`
   - `# cat /sys/class/dmi/id/product_uuid`
4. Passwordless SSH login: configure passwordless login from master1 to the other nodes. Run this step on master1 only.
   - Create a key pair: `# ssh-keygen -t rsa`
   - Copy the public key to master2/master3/work1/work2/work3 (repeat for each host): `[root@master1 ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@master2`
   - Test the login: `# ssh root@master2`
5. Install the dependency packages: `# dnf -y install conntrack bash-completion ipvsadm ipset jq iptables sysstat libseccomp git iptables-services`
6. Time synchronization
   - On master1, edit `/etc/chrony.conf` (`# vim /etc/chrony.conf`), then run:
     - `# systemctl start chronyd`
     - `# systemctl enable chronyd`
     - `# chronyc sources`
   - On the other nodes, edit `/etc/chrony.conf` (`# vim /etc/chrony.conf`), then run:
     - `# systemctl start chronyd`
     - `# systemctl enable chronyd`
     - `# chronyc sources`
7. Firewall rules (disable firewalld):
   - `# systemctl stop firewalld`
   - `# systemctl disable firewalld`
8. Disable SELinux:
   - `# setenforce 0`
   - `# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config`
9. Disable the swap partition:
   - `# swapoff -a`
   - `# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab`
10. Upgrade the kernel (optional)
    - Import the public key: `# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org`
    - Install ELRepo: `# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm`; on CentOS 8 use `# yum install https://www.elrepo.org/elrepo-release-8.0-2.el8.elrepo.noarch.rpm`
    - Load the elrepo-kernel metadata: `# yum --disablerepo=\* --enablerepo=elrepo-kernel repolist`
    - Install the latest mainline kernel: `# yum --disablerepo=\* --enablerepo=elrepo-kernel install kernel-ml.x86_64 -y`
    - Remove the old tool packages: `# yum remove kernel-tools-libs.x86_64 kernel-tools.x86_64 -y`
    - Install the new tool packages: `# yum --disablerepo=\* --enablerepo=elrepo-kernel install kernel-ml-tools.x86_64 -y`
    - List the boot menu entries in order: `# awk -F \' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg`
    - Set the default boot entry. 0 is the first entry in the list, here the newly installed 5.5 kernel: `# grub2-set-default 0`, then check it with `# grub2-editenv list`
    - Reboot and verify: `# uname -a`
11. Set the kernel parameters needed for bridged traffic and iptables:

```bash
# The net.bridge.* keys only exist once the br_netfilter module is loaded
modprobe br_netfilter
cat <<EOF > /etc/sysctl.d/kubernetes.conf
vm.swappiness = 0
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
sysctl -p /etc/sysctl.d/kubernetes.conf
```

# III. Deploy the etcd Cluster (run on master nodes)

1. Download the cfssl tools:
   - `# curl -s -L -o /bin/cfssl https://pkg.cfssl.org/R1.2/cfssl_linux-amd64`
   - `# curl -s -L -o /bin/cfssljson https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64`
   - `# curl -s -L -o /bin/cfssl-certinfo https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64`
   - `# chmod +x /bin/cfssl*`
2. Generate the etcd certificates. Work in a dedicated directory: `# mkdir -p /k8s/tls/etcd`
   - ca-config.json

```json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
```

- ca-csr.json

```json
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "L": "Beijing",
    "ST": "Beijing"
  }]
}
```

3. server-csr.json. The `hosts` list must contain the IP of every etcd member.

```json
{
  "CN": "etcd",
  "hosts": [
    "192.168.10.35",
    "192.168.10.36",
    "192.168.10.37"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "L": "BeiJing",
    "ST": "BeiJing"
  }]
}
```

4. Create the CA and issue the certificates:
   - `# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -` produces ca.pem and ca-key.pem
   - `# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server` produces server.csr, server.pem and server-key.pem
5. Download and unpack the etcd binaries (all master nodes)
   - [Download page](https://github.com/coreos/etcd/releases/)
   - `# mkdir -p /opt/etcd/{bin,cfg,ssl}`
   - `# tar -zxvf etcd-v3.4.7-linux-amd64.tar.gz`
   - `# mv etcd-v3.4.7-linux-amd64/{etcd,etcdctl} /opt/etcd/bin`
   - Copy the certificates: `# cp {ca,server,server-key}.pem /opt/etcd/ssl/`
   - Create the etcd configuration file (all master nodes):

```bash
cat > /opt/etcd/cfg/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.10.35:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.10.35:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.35:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.35:2379"
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.10.35:2380,etcd2=https://192.168.10.36:2380,etcd3=https://192.168.10.37:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_ENABLE_V2="true"
EOF
```

- Configuration parameters:
  - `ETCD_NAME`: node name
  - `ETCD_DATA_DIR`: data directory
  - `ETCD_LISTEN_PEER_URLS`: listen address for cluster (peer-to-peer) traffic
  - `ETCD_LISTEN_CLIENT_URLS`: listen address for client access
  - `ETCD_INITIAL_ADVERTISE_PEER_URLS`: peer address advertised to the cluster
  - `ETCD_ADVERTISE_CLIENT_URLS`: client address advertised to the cluster
  - `ETCD_INITIAL_CLUSTER`: addresses of all cluster members
  - `ETCD_INITIAL_CLUSTER_TOKEN`: cluster token
  - `ETCD_INITIAL_CLUSTER_STATE`: state when joining. `new` creates a new cluster, `existing` joins an existing one
  - `ETCD_ENABLE_V2="true"`: flannel accesses etcd through the v2 API, while Kubernetes uses the v3 API. etcd 3.4 disables the v2 API by default, so it is enabled here for flannel.
- Manage etcd with systemd (all master nodes):

```bash
# vim /usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
  --cert-file=/opt/etcd/ssl/server.pem \
  --key-file=/opt/etcd/ssl/server-key.pem \
  --peer-cert-file=/opt/etcd/ssl/server.pem \
  --peer-key-file=/opt/etcd/ssl/server-key.pem \
  --trusted-ca-file=/opt/etcd/ssl/ca.pem \
  --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

6. Copy master1's files to the other master nodes. Run the commands from `/opt` and repeat them for master3:
   - `# scp -r etcd master2:/opt`
   - `# scp /usr/lib/systemd/system/etcd.service master2:/usr/lib/systemd/system`
   - On each target node, edit `/opt/etcd/cfg/etcd.conf`: set `ETCD_NAME` to `etcd2`/`etcd3` and change the listen and advertise URLs to that node's own IP. `ETCD_INITIAL_CLUSTER` stays the same.
7. Start etcd and enable it at boot. The first node appears to hang on start while it waits for the other members:
   - `# systemctl start etcd`
   - `# systemctl enable etcd`
8. Check the cluster health:
   `# /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.10.35:2379,https://192.168.10.36:2379,https://192.168.10.37:2379" endpoint health`

# IV. Install Docker (run on worker nodes)

- At the time of writing, the newest Docker version supported by Kubernetes 1.18 is 19.03.8.
- Download and unpack the offline package:
  - `# wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.8.tgz`
  - `# tar -zxvf docker-19.03.8.tgz`
- Move the unpacked docker files into `/usr/bin/`: `# cp docker/* /usr/bin/`

1. Register docker as a systemd service:

```bash
# vim /usr/lib/systemd/system/docker.service
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
```

2. Start the docker service:
   - `# systemctl daemon-reload`
   - `# systemctl start docker`
   - `# systemctl enable docker.service`
3. Verify: `# systemctl status docker`
4. Copy the binaries and the unit file to the other worker nodes and start docker there.

# V. Install the flannel Network (master nodes)

1. flannel stores its subnet information in etcd. Make sure etcd is reachable, then write the predefined network range. etcdctl 3.4 defaults to the v3 API, but flannel reads this key through the v2 API, so switch etcdctl to v2 with `ETCDCTL_API=2`:
   `# ETCDCTL_API=2 /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.10.35:2379,https://192.168.10.36:2379,https://192.168.10.37:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'`
2. Check the subnet configuration stored in etcd:
   `# ETCDCTL_API=2 /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.10.35:2379,https://192.168.10.36:2379,https://192.168.10.37:2379" get /coreos.com/network/config`
3. Download the binary package:
   - `# wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz`
   - `# tar -zxvf flannel-v0.10.0-linux-amd64.tar.gz -C ./`
   - `# mkdir -p /opt/kubernetes/{bin,cfg}`
   - `# mv flanneld mk-docker-opts.sh /opt/kubernetes/bin`
4. Configure flannel: `# vim /opt/kubernetes/cfg/flanneld`
   `FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.10.35:2379,https://192.168.10.36:2379,https://192.168.10.37:2379 --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem"`
5. Manage flannel with systemd:

```bash
# vim /usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/opt/kubernetes/cfg/flanneld
ExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS
ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

6. Start Docker in the subnet assigned by flannel:

```bash
# vim /usr/lib/systemd/system/docker.service
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/run/flannel/subnet.env
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
```

7. Start flannel and restart Docker:
   - `# systemctl daemon-reload`
   - `# systemctl start flanneld`
   - `# systemctl enable flanneld`
   - `# systemctl restart docker`
8. Check the result with `# ifconfig`. Sample output:
   - docker0: `flags=4099<UP,BROADCAST,MULTICAST> mtu 1500`, `inet 172.17.74.1 netmask 255.255.255.0 broadcast 0.0.0.0`
   - flannel.1: `flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450`, `inet 172.17.74.0 netmask 255.255.255.255 broadcast 0.0.0.0`

   docker0 and flannel.1 must be in the same subnet.
9. To test connectivity between nodes, ping the docker0 IP of another node from any node. Each node gets its own /24 from the flannel network, for example:
   - k8s_node1 → 172.17.74.0/24
   - k8s_node2 → 172.17.76.0/24

# VI. Master Node Deployment

1. Generate the certificates the master needs: `# mkdir -p /k8s/tls/kubernetes`
   - `# cat ca-config.json`

```json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
```

- `# cat ca-csr.json`

```json
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "L": "Beijing",
    "ST": "Beijing",
    "O": "k8s",
    "OU": "System"
  }]
}
```

`# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -`

- Generate the apiserver certificate: `# cat server-csr.json`. `hosts` must list every address clients use to reach the apiserver. If the apiserver will also be reached through other master IPs or through the load balancer VIP (section IX), add those addresses too, otherwise TLS verification fails.

```json
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "192.168.18.80",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "L": "BeiJing",
    "ST": "BeiJing",
    "O": "k8s",
    "OU": "System"
  }]
}
```

`# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server`

- Generate the kube-proxy certificate: `# cat kube-proxy-csr.json`

```json
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "L": "BeiJing",
    "ST": "BeiJing",
    "O": "k8s",
    "OU": "System"
  }]
}
```

`# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy`

2. Deploy the apiserver
   - Download the binary package `kubernetes-server-linux-amd64.tar.gz`, then:
     - `# mkdir /opt/kubernetes/{bin,cfg,ssl} -p`
     - `# tar -zxvf kubernetes-server-linux-amd64.tar.gz`
     - `# cd kubernetes/server/bin`
     - `# cp kube-apiserver kube-scheduler kube-controller-manager kubectl /opt/kubernetes/bin`
   - Copy the certificates generated above (in `/k8s/tls/kubernetes`) to where the configuration expects them: `# cp ca.pem ca-key.pem server.pem server-key.pem /opt/kubernetes/ssl/`
   - Create the token file, which is used later for TLS bootstrapping. `# cat /opt/kubernetes/cfg/token.csv` shows:
     `674c457d4dcf2eefe4920d7dbb6b0ddc,kubelet-bootstrap,10001,"system:kubelet-bootstrap"`
     - Column 1: a random string. You can generate your own, for example with `head -c 16 /dev/urandom | od -An -t x | tr -d ' '`
     - Column 2: user name
     - Column 3: UID
     - Column 4: user group
   - Create the apiserver configuration file:

```bash
# cat /opt/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://192.168.10.35:2379,https://192.168.10.36:2379,https://192.168.10.37:2379 \
--bind-address=192.168.18.80 \
--secure-port=6443 \
--advertise-address=192.168.18.80 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth \
--token-auth-file=/opt/kubernetes/cfg/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/server.pem \
--etcd-keyfile=/opt/etcd/ssl/server-key.pem"
```

- Parameters:
  - `--logtostderr`: write logs to stderr
  - `--v`: log verbosity level
  - `--etcd-servers`: etcd cluster addresses
  - `--bind-address`: listen address
  - `--secure-port`: HTTPS port
  - `--advertise-address`: address advertised to the cluster
  - `--allow-privileged`: allow privileged containers
  - `--service-cluster-ip-range`: virtual IP range for Services
  - `--enable-admission-plugins`: admission control plugins
  - `--authorization-mode`: authorization modes. Enables RBAC authorization and Node authorization, which lets nodes manage their own objects
  - `--enable-bootstrap-token-auth`: enable bootstrap token authentication for TLS bootstrapping (covered later)
  - `--token-auth-file`: token file
  - `--service-node-port-range`: default port range allocated to NodePort Services
- Manage the apiserver with systemd:
```bash
# cat /usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

- Start the apiserver:
  - `# systemctl daemon-reload`
  - `# systemctl enable kube-apiserver`
  - `# systemctl restart kube-apiserver`

3. Deploy the scheduler
   - Create the scheduler configuration file:

```bash
# cat /opt/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true \
--v=4 \
--master=127.0.0.1:8080 \
--leader-elect"
```

- Parameters:
  - `--master`: connect to the local apiserver
  - `--leader-elect`: when several instances of the component run, elect a leader automatically (HA)
- Manage the scheduler with systemd:

```bash
# cat /usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

- Start the scheduler:
  - `# systemctl daemon-reload`
  - `# systemctl enable kube-scheduler`
  - `# systemctl restart kube-scheduler`

4. Deploy the controller-manager
   - Create the controller-manager configuration file:

```bash
# cat /opt/kubernetes/cfg/kube-controller-manager
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
--v=4 \
--master=127.0.0.1:8080 \
--leader-elect=true \
--address=127.0.0.1 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
--root-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem"
```

- Manage the controller-manager with systemd:

```bash
# cat /usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

- Start the controller-manager:
  - `# systemctl daemon-reload`
  - `# systemctl enable kube-controller-manager`
  - `# systemctl restart kube-controller-manager`

5. Check the cluster component status with kubectl: `# kubectl get cs`

# VII. Deploy the Node Components

## 1. TLS Bootstrapping

Once TLS authentication is enabled on the master's apiserver, a node's kubelet must present a valid certificate signed by the CA before it can talk to the apiserver. Signing certificates by hand becomes tedious when there are many nodes, which is why the TLS bootstrapping mechanism exists. The kubelet authenticates as a low-privilege user and automatically requests a certificate through the apiserver. The certificate is then signed dynamically by the cluster (kube-controller-manager signs it with the `--cluster-signing-cert-file`/`--cluster-signing-key-file` configured above).

- Bind the kubelet-bootstrap user to the system cluster role:
  `kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap`
- Create the kubeconfig files. In the directory where the Kubernetes certificates were generated (/home/k8s), create a script `kubernetes.sh` containing the following commands:
  - Variables for the kubelet bootstrapping kubeconfig: `BOOTSTRAP_TOKEN=674c457d4dcf2eefe4920d7dbb6b0ddc` and `KUBE_APISERVER="https://192.168.18.80:6443"`
  - Set the cluster parameters:
    `kubectl config set-cluster kubernetes --certificate-authority=./ca.pem --embed-certs=true --server=${KUBE_APISERVER} --kubeconfig=bootstrap.kubeconfig`
  - Set the client authentication parameters:
    `kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=bootstrap.kubeconfig`
  - Set the context parameters:
    `kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig`
  - Set the default context:
    `kubectl config use-context default --kubeconfig=bootstrap.kubeconfig`
  - Create the kube-proxy kubeconfig file:
    - `kubectl config set-cluster kubernetes --certificate-authority=./ca.pem --embed-certs=true --server=${KUBE_APISERVER} --kubeconfig=kube-proxy.kubeconfig`
    - `kubectl config set-credentials kube-proxy --client-certificate=./kube-proxy.pem --client-key=./kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig`
    - `kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig`
    - `kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig`
- Running `./kubernetes.sh` produces two files, `bootstrap.kubeconfig` and `kube-proxy.kubeconfig`. Copy both with scp to `/opt/kubernetes/cfg` on every node.
- Copy `kubectl` from the master to `/opt/kubernetes/bin` on every node with scp, and add the environment variable `export PATH=$PATH:/opt/kubernetes/bin/` to `/etc/profile`.
- On a node, cd to the directory that holds `kubelet.kubeconfig` and run `kubectl get nodes --kubeconfig=kubelet.kubeconfig`. This file only exists after the kubelet's certificate request has been approved (see the next step).

## 2. Deploy the kubelet

- After unpacking `kubernetes-server-linux-amd64.tar.gz`, find `kubelet` and `kube-proxy` in `kubernetes/server/bin` and copy both files to `/opt/kubernetes/bin` on each node.
- Create the kubelet configuration file:

```bash
# cat /opt/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.18.85 \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
--config=/opt/kubernetes/cfg/kubelet.config \
--cert-dir=/opt/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
```

- Parameters:
  - `--hostname-override`: host name shown in the cluster
  - `--kubeconfig`: location of the kubeconfig file, which is generated automatically
  - `--bootstrap-kubeconfig`: the `bootstrap.kubeconfig` file generated earlier
  - `--cert-dir`: where the issued certificates are stored
  - `--pod-infra-container-image`: the infrastructure (pause) image that holds each Pod's network
- The kubelet.config file lives at `/opt/kubernetes/cfg/kubelet.config` on the node:

```yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.18.85
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS: ["10.0.0.2"]
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true
```

- Manage the kubelet with systemd:

```bash
# cat /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet
ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
```

- Start the kubelet:
  - `# systemctl daemon-reload`
  - `# systemctl enable kubelet`
  - `# systemctl restart kubelet`
- Run the steps above on every node, changing `--hostname-override` and `address` to each node's own IP. After startup the nodes still have not joined the cluster, because the master must approve them manually:
  - `# kubectl get csr`
  - `# kubectl certificate approve XXXXID`
  - `# kubectl get node`
- For example: `# kubectl certificate approve node-csr-PjBnNDUYsE1soevzLVunyjrCWDEOj_bMUo7ypVuQOXs`

## 3. Deploy kube-proxy

- Create the kube-proxy configuration file. `--cluster-cidr` is the Pod network range (the flannel network), not the Service range:

```bash
# cat /opt/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.18.85 \
--cluster-cidr=172.17.0.0/16 \
--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
```

- Manage kube-proxy with systemd:

```bash
# cat /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

- Start kube-proxy (run this on every node):
  - `# systemctl daemon-reload`
  - `# systemctl enable kube-proxy`
  - `# systemctl restart kube-proxy`
- Check the cluster status:
  - `[root@k8s_master1 bin]# kubectl get node`
  - `[root@k8s_master1 bin]# kubectl get cs`

# VIII. Run a Test

- Create an nginx web deployment to check that the cluster works. In Kubernetes 1.18, `kubectl run` only creates a single Pod, so create a Deployment explicitly:
  - `# kubectl create deployment nginx --image=nginx`
  - `# kubectl scale deployment nginx --replicas=3`
  - `# kubectl expose deployment nginx --port=88 --target-port=80 --type=NodePort`
- Check the pods and services:
  - `[root@k8s_master1 bin]# kubectl get pods`
  - `[root@k8s_master1 bin]# kubectl get svc`

# IX. Master High Availability

- Copy the master files to the second master:

```bash
scp -r /opt/kubernetes/ root@k8s-master2:/opt/
mkdir /opt/etcd   # run this on k8s-master2
scp -r /opt/etcd/ssl root@k8s-master2:/opt/etcd
scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@k8s-master2:/usr/lib/systemd/system/
scp /usr/bin/kube* root@k8s-master2:/usr/bin
```

- On k8s-master2, change the bind and advertise addresses in the apiserver configuration file to the node's own IP, then start the services:

```bash
[root@k8s-master2 cfg]# egrep 'advertise|bind' kube-apiserver
--bind-address=192.168.31.64 \
--advertise-address=192.168.31.64 \
```

1. Start the services:

```bash
systemctl start kube-apiserver
systemctl start kube-controller-manager
systemctl start kube-scheduler
systemctl enable kube-apiserver
systemctl enable kube-controller-manager
systemctl enable kube-scheduler
systemctl status kube-apiserver
systemctl status kube-controller-manager
systemctl status kube-scheduler
```

2. Deploy the load balancer
   - Install nginx and keepalived on both loadbalance-master and loadbalance-slave. nginx reverse-proxies the kube-apiserver service of the two masters.
   - keepalived runs a health check that tests whether nginx is alive. If nginx fails on one node, the VIP 192.168.31.88 floats to the other node.
   - Install nginx and keepalived. On CentOS, the nginx `stream` module used below may be packaged separately (nginx-mod-stream).
     - `# yum install -y nginx`
     - `# yum install -y keepalived`
   - Edit the nginx configuration file `/etc/nginx/nginx.conf`:

```bash
[root@loadbalancer1 keepalived]# cat /etc/nginx/nginx.conf
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
        server 192.168.31.63:6443;
        server 192.168.31.64:6443;
    }

    server {
        listen 6443;
        proxy_pass k8s-apiserver;
    }
}

http {
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx/access.log main;

    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
    types_hash_max_size 2048;

    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    include /etc/nginx/conf.d/*.conf;

    server {
        listen 80 default_server;
        listen [::]:80 default_server;
        server_name _;
        root /usr/share/nginx/html;

        # Load configuration files for the default server block.
        include /etc/nginx/default.d/*.conf;

        location / {
        }

        error_page 404 /404.html;
        location = /40x.html {
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
        }
    }
}
```

- keepalived configuration on the master:

```bash
[root@loadbalancer1 keepalived]# cat /etc/keepalived/keepalived.conf
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_MASTER
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51   # VRRP router ID; unique per instance, same on master and backup
    priority 100           # priority; the backup server uses 90
    advert_int 1           # VRRP advertisement (heartbeat) interval, default 1 second
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.31.88/24
    }
    track_script {
        check_nginx
    }
}
```

- keepalived configuration on the backup:

```bash
[root@loadbalancer2 nginx]# cat /etc/keepalived/keepalived.conf
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_BACKUP
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51   # VRRP router ID; unique per instance, same on master and backup
    priority 90            # priority; the backup server uses 90
    advert_int 1           # VRRP advertisement (heartbeat) interval, default 1 second
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.31.88/24
    }
    track_script {
        check_nginx
    }
}
```

- Health check script `check_nginx.sh`. Both load balancer nodes need it, and keepalived can only run it if it is executable (`# chmod +x /etc/keepalived/check_nginx.sh`):

```bash
[root@loadbalancer1 nginx]# cat /etc/keepalived/check_nginx.sh
#!/bin/bash
# Exit 1 (unhealthy) when no nginx process is running, so keepalived releases the VIP
count=$(ps -ef | grep nginx | egrep -cv "grep|$$")

if [ "$count" -eq 0 ]; then
    exit 1
else
    exit 0
fi
```

- Start the services and enable them at boot:
  - `# systemctl start nginx keepalived`
  - `# systemctl enable nginx keepalived`
  - `# systemctl status nginx keepalived`
- Verify high availability
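The high-availability check can be partly scripted. The following is a minimal sketch and is not part of the original procedure. It assumes the VIP `192.168.31.88` and interface `eth0` from the keepalived configuration above, and both can be overridden through the environment. The helper names `vip_in_addr_list`, `vip_holder` and `apiserver_via_vip` are hypothetical. The last helper also assumes that anonymous requests to `/version` are allowed, which is normally the default RBAC behavior.

```bash
#!/usr/bin/env bash
# Sketch of helpers for checking keepalived VIP failover in front of kube-apiserver.
# Assumed values, taken from the keepalived/nginx config above; override via env vars.
VIP="${VIP:-192.168.31.88}"
NIC="${NIC:-eth0}"

# Succeeds if the `ip -4 -o addr` output read on stdin has the given VIP assigned.
# Fixed-string match on "inet <ip>/" so that e.g. 192.168.31.8 does not match .88.
vip_in_addr_list() {
  grep -Fq "inet ${1}/"
}

# Prints "local" when this host currently holds the VIP, otherwise "remote".
vip_holder() {
  if ip -4 -o addr show dev "$NIC" | vip_in_addr_list "$VIP"; then
    echo local
  else
    echo remote
  fi
}

# Sends a request to kube-apiserver through nginx on the VIP.
# -k skips certificate verification, because the VIP may not be listed in the server cert.
apiserver_via_vip() {
  curl -sk --max-time 5 "https://${VIP}:6443/version"
}
```

Typical use: source the file on loadbalancer1 and run `vip_holder`, which should print `local`. Then stop nginx there (`systemctl stop nginx`) so that `check_nginx.sh` fails. Run `vip_holder` on loadbalancer2, which should now print `local`, and run `apiserver_via_vip` from any host to confirm the apiserver still answers through the VIP. When nginx is started again on loadbalancer1, its higher priority (100) means the VIP is expected to move back.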

Nathan

June 22, 2024 12:48
